100 times more deployments: How did we achieve Continuous Deployment?

Yes, we develop on the main branch, each commit is treated as a release candidate and each push is expected to reach production. The results: 100 times more deployments, shorter lead and recovery times, and lower failure rates. But how?

In recent years I have often joined projects where neither Continuous Delivery nor Continuous Deployment was in place at the beginning. This article is derived from my experiences on several projects and aims to share how to implement Continuous Deployment. Let's focus on one of my recent projects, the modernisation of a customer-facing website in the automotive industry, where we increased our number of deployments from 2 to ~200 a month.

Before we go further, let's reflect on the initial state of the application.

- Monolith with older tech stack

- Code freeze every second week

- Tagged Releases

- Manual and some Automated Testing

- Many changes are deployed at the same time.

Problem Statement

Nothing is wrong with the above state. However, we could do better. In a fast-changing world, it is crucial to serve the needs of the end user in a timely manner and to react to their feedback. That also means adapting to change faster and more easily, which is the core of Agility. Of course it depends on the product, but two-week cycles for fixing a bug or releasing a feature no longer fit our goals of high customer satisfaction and frequent feedback.

Therefore, we triggered the change: not only in the product and the tools and technologies behind it, but also in the way of delivering value.

Some Definitions about CI/CD

Now that we have an idea about the initial state, I would also like to briefly describe the terms behind CI/CD.

- Continuous Integration: Frequently integrating the local development codebase into the trunk, the so-called main branch.

- Continuous Delivery: Being capable of deploying the latest codebase whenever requested. This means the codebase on the main branch is always up to date and ready to deploy, but when to deploy to the production environment is usually a deliberate decision.

- Continuous Deployment: Every integration into the main branch goes into production without any prior request. From a developer perspective, any push to the main branch of the origin repository is expected to be deployed automatically into production (assuming it passes all the quality gates such as automated tests).

Counter Argumentation

What I mostly heard at the beginning of the project(s) can be summarised as:

- This is very dangerous.

- Our developers make mistakes. We have to control it.

- We can not do that.

and similar.

Now, let’s move forward to sharing what we applied to achieve Continuous Deployment.

Seeding the Idea

To make such a change, it is essential to familiarise people with the idea by talking about what the alternatives can be. I encouraged the devs, and together we conducted several Agile Principles and Best Development Practices sessions where we talked about and aligned on certain development principles within the team(s). This also included how Continuous Deployment could serve many Agile Principles such as "Frequent Delivery", "Measure Progress", "Welcome the change" etc.

Meanwhile, we took actions on the following as well.

Ensuring Team Autonomy

One of the impediments on the way to Continuous Deployment of the existing application was its nature: one big application on which several teams work and commit together, deployed On-Prem.

Micro-Services over Monolith

To enable team autonomy, it usually makes sense to split such monolith applications into smaller pieces based on domains, with little or no interaction in between but clearer responsibilities for teams. Finding such a split can sound easy but can be hard to apply in practice. The key point here is not to over-engineer, but to focus on the value and keep solutions simple. There is no single answer; a case-by-case evaluation is necessary.

Our initial application was a big monolith with an old tech stack; it is now divided by domains, built with modern languages and tools, to enable team autonomy. In many of my projects, like this one, it made sense to go further with microservices. A team no longer waits for another team to deploy its changes, as it is less dependent on others, and this is a huge step towards frequent deployments, i.e. Continuous Deployment.

Cloud over On-Prem

It is not uncommon to have approval processes if an application is deployed into On-Prem environments. In general these steps are handled by a particular team or department. This may sound more convenient for product teams, but in reality it often means more dependencies and more waiting time.

A catalyst here is the cloud environment, where the team can be solely responsible for the infrastructure it runs. This means there is no long approval process for adding or removing services like in On-Prem. However, to achieve this, the team needs certain skills in cloud, security and other non-functional requirements. Adding people with these skills to the team or up-skilling existing members are some of the ways to go.

In this project, we ran the applications on a cloud platform such as AWS, which enabled a degree of team autonomy besides other advantages such as scalability and availability. This was a big step towards Continuous Deployment, as there were no longer such impediments to deploying new services.

Ensuring Quality

The sooner the better! To enable CI/CD and remove the manual steps in between, quality assurance should also be automated and distributed over several steps from the beginning.

One of the arguments or fears against Continuous Deployment is missing quality checks. In the former approach, manual testing and code reviews ensure a certain quality. But there are other ways to achieve that. What did we do?

Trunk Based Development over Feature Branches

Development on feature branches can seem convenient, but it can have the following downsides:

- The devs may not often update the feature branch, as this could lead to conflicts.

- The amount of code to integrate into the main branch at once can get very big.

- It can be hard to observe the impact of the merge into the main branch due to the size of the change.

Trunk Based Development, on the other hand, helps to solve these problems. All the devs develop on the main branch, and every single change is reflected in the other developments as soon as the code is pushed or updated. Changes tend to be frequent but small by nature, which is easier to manage and serves the idea of incremental development.

Introducing Feature Toggles

In the new setup, features are also deployed while they are still in development, so it is likely that a feature is not yet ready to be presented to the end user. Feature toggles help here: we can experience the progress in another environment, i.e. Stage, while keeping the changes invisible to the end user by disabling the feature in the production environment.

This way, we could actually see how the changes looked in an environment other than production. We saw progress day by day, and it helped us to mitigate problems earlier.
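The toggle idea described above can be sketched in a few lines. This is only a minimal illustration, not the setup used in the project: the toggle table, the feature name `new_configurator` and the environment names are all assumptions made for the example.

```python
# Hypothetical toggle table: which feature is enabled in which environment.
# In a real system this would typically live in configuration, not in code.
FEATURE_TOGGLES = {
    "new_configurator": {"stage": True, "production": False},
}


def is_enabled(feature: str, environment: str) -> bool:
    """Return True only if the feature is explicitly enabled for the environment."""
    return FEATURE_TOGGLES.get(feature, {}).get(environment, False)


def render_page(environment: str) -> str:
    """The same deployed code serves both variants, depending on the toggle."""
    if is_enabled("new_configurator", environment):
        return "new configurator"
    return "current configurator"
```

With this table, `render_page("stage")` serves the unfinished feature while `render_page("production")` keeps serving the current one. Unknown features and environments default to disabled, so a missing entry can never expose unfinished work.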

Pairing over Pull Requests (PRs)

PRs are of course one way of ensuring quality. To put it simply, a developer develops code on a branch for some time, and when she finishes her code, she creates a Pull Request so that her code can be reviewed. After the review is finished, the code can be merged into the main branch and shipped.

However, PRs have the following downsides:

- Waiting time for the review

- Ping-pong between comments, fixes and yet more comments.

- Knowledge sharing may not be very effective through reviews.

Pairing is a different approach where there are no separate code reviews; instead, two devs usually work together and develop the code. Pairing has the following advantages:

- No waiting time for code reviews, as the review happens during development

- Knowledge sharing is more effective, as both devs in the pair take part in the development

- Quality is ensured during development, not afterwards.

While it has certain benefits, pairing also comes with challenges. It can be tiring, and not everybody is confident about it, especially in the beginning. But these can be handled over time.

So what did we do? From the beginning of the project(s), we encouraged Pairing, which helped us reach a certain level of quality and enhanced knowledge sharing. We no longer needed PRs, because the pairs ensured the 4-eyes principle, and together with trunk based development and thorough testing, we were confident to go further.

Full Automation and TDD over Manual Testing

Another important aspect of enabling quality and sustainable development is Test Driven Development (TDD). In TDD, a test is written before the implementation, and the implementation is then written to make the failing test pass. This helps to ensure a certain test coverage, to better understand the requirements of the feature being developed and to challenge the development towards higher quality.
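As a small illustration of the test-first order, here is a sketch with plain assertions. The function `format_mileage` and its expected behaviour are invented for this example and are not taken from the project.

```python
# Step 1 (red): the tests are written first. They fail until format_mileage exists.
def test_formats_mileage_with_thousands_separator():
    assert format_mileage(12500) == "12,500 km"


def test_rejects_negative_mileage():
    try:
        format_mileage(-1)
    except ValueError:
        return  # expected: negative input is rejected
    raise AssertionError("expected ValueError for negative mileage")


# Step 2 (green): the simplest implementation that makes both tests pass.
def format_mileage(km: int) -> str:
    if km < 0:
        raise ValueError("mileage must not be negative")
    return f"{km:,} km"
```

The tests state the requirement before any implementation exists, which is where the better understanding of the feature comes from. A third step, refactoring while the tests stay green, would follow in a real cycle.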

User tests can be automated through end-to-end (E2E) tests. To ensure that changes do not break other teams' applications, Consumer Driven Contract Testing can be implemented.

All of the above requires a certain effort in the beginning, and the details are out of scope for this post. But the value they bring can be worth the effort, because they eliminate the long-term effort and the human factor of manual testing.

Now it is time to take a step back and look at the overall picture.
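To give a rough feeling for the contract testing idea, here is a hand-rolled sketch that does not use any contract testing library. The contract, the field names and the `get_vehicle` provider function are all hypothetical.

```python
# Contract published by the consumer team: the fields (and types) it relies on.
VEHICLE_CONTRACT = {"id": str, "model": str, "price_eur": int}


def violations(contract: dict, response: dict) -> list[str]:
    """List every way a provider response breaks the consumer's contract."""
    problems = []
    for field, expected_type in contract.items():
        if field not in response:
            problems.append(f"missing field: {field}")
        elif not isinstance(response[field], expected_type):
            problems.append(f"wrong type for {field}")
    return problems


# Provider side (hypothetical): the response shape the provider currently returns.
def get_vehicle() -> dict:
    return {"id": "v-1", "model": "Example", "price_eur": 30000, "colour": "red"}
```

The provider team would run `violations(VEHICLE_CONTRACT, get_vehicle())` in its own pipeline and expect an empty list. Extra fields such as `colour` are allowed, but removing or retyping a field that a consumer depends on fails the check before the change is deployed.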

What does the whole development process look like?

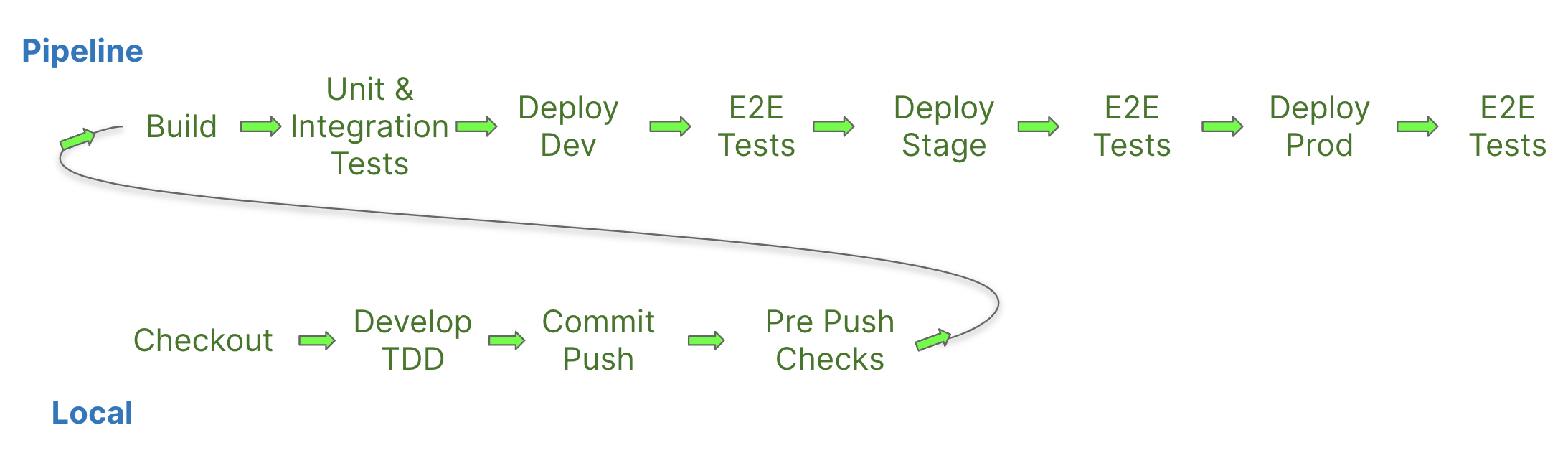

To illustrate the steps of development and deployment a bit better, below is a pipeline.

A mature pipeline with certain test coverage is more likely to catch and mitigate errors.

Since the development is already done incrementally in small steps and it takes minutes to deploy to production, the fix is likely to be a small one and fast to deploy.
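The gating logic of such a pipeline can be sketched as follows. This is a simplified model rather than a real CI configuration; the gate names and their order are assumptions based on the test types mentioned in this article.

```python
from typing import Callable

# A gate is a name plus a check that returns True when the gate passes.
Gate = tuple[str, Callable[[], bool]]


def run_pipeline(gates: list[Gate], deploy: Callable[[], None]) -> str:
    """Run the gates in order and deploy only if every gate passes."""
    for name, check in gates:
        if not check():
            # Stop at the first failing gate; nothing reaches production.
            return f"stopped at: {name}"
    deploy()
    return "deployed"


def example_gates(e2e_passes: bool) -> list[Gate]:
    """Hypothetical gates; real checks would run the actual test suites."""
    return [
        ("unit tests", lambda: True),
        ("contract tests", lambda: True),
        ("e2e tests", lambda: e2e_passes),
    ]
```

Every push runs the same gates, and a single failing gate is enough to keep the change out of production. This is what replaces the manual approval step.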

Definition of Done (DoD) and Acceptance Criteria over Unstructured Description

Code quality is just one part of the overall quality. Quality indeed starts with the problem statement. A well-defined ticket is necessary for success; otherwise, the work can drag on for a long time.

To avoid that, we aligned on clarifying the Acceptance Criteria, i.e. all the necessary things to implement, listed one by one, and the Definition of Done, e.g. a feature toggle is removed or certain non-functional requirements such as performance are met.

Result

After applying these changes, we achieved better results on the Four Key Metrics:

- Deployment Frequency: Increased from 2 to ~200 a month

- MTTR (mean time to recovery): Decreased from days to minutes, as we got rid of PRs and Manual Tests.

- Change Lead Time: On average 2-3 weeks shorter, because it now takes 15 minutes for a change to reach production instead of weeks.

- Change Failure Rate: Almost 100 times lower, since the number of deployments is much higher while the error count is around the same.
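The arithmetic behind the Change Failure Rate figure can be made explicit with a short sketch. It uses the common definition of failed deployments divided by total deployments. The deployment counts 2 and 200 come from this article, while the failure counts passed in are placeholders, since the actual error count is not stated here.

```python
from fractions import Fraction


def change_failure_rate(failed_deployments: int, total_deployments: int) -> Fraction:
    """Share of deployments that caused a failure in production."""
    return Fraction(failed_deployments, total_deployments)


def improvement_factor(failed: int, before: int, after: int) -> Fraction:
    """How many times lower the rate gets when the failure count stays the same.

    `failed` must be greater than zero, otherwise both rates are zero.
    """
    return change_failure_rate(failed, before) / change_failure_rate(failed, after)
```

Whatever placeholder is used for the failure count, `improvement_factor(failed, 2, 200)` evaluates to 100. The factor depends only on the ratio of deployment counts, which is why an unchanged error count with 100 times more deployments yields a rate about 100 times lower.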

Summary

To enable Continuous Deployment, we ran trainings and seeded the idea of Agile Principles and Best Development Practices, while we preferred and applied:

- Microservices over Monolith

- Cloud over On-Prem

- Trunk Based Development + Feature Toggles over Feature Branches

- Pairing over Pull Requests

- Automated Testing + TDD over Manual Testing

- DoD + Acceptance Criteria over Unstructured Description

- Being Happy :)

Of course, this is not the only way to achieve better value delivery. These choices depend on the nature of the project, and something else could be a better fit for different cases. However, this or similar approaches were helpful in several projects around me and improved the overall delivery process significantly.

I hope you enjoyed reading and now have some more insight into how we apply CI/CD.

Attribution

Cover Photo by Nataliya Vaitkevich from Pexels

Other images are my own work.